Data was collected in accordance with the principles of the Declaration of Helsinki (October, 2013). Signed informed consent was obtained from all subjects. Participants gave consent to publish the data. Approval was obtained from the Medical Ethical Committee of the Erasmus Medical Center, Rotterdam, The Netherlands (MEC-2014-573). Trial registration for the Baerveldt study: NTR4946, registered 06/01/2015, URL https://www.trialregister.nl/trial/4823. Trial registration for the UTDSAEK study: NTR4945, registered on 15-12-2014, URL https://www.trialregister.nl/trial/4805.

Grading the dataset

The 500 images with guttae were graded according to how difficult they are to segment, taking two metrics into account: the amount of guttae and the amount of blur present in the image. For each metric, an image was given a value of 1 (mild), 2 (moderate), or 3 (severe), and the final grade was the sum of both values. As a result, there were 134 images with low complexity (grades 1–2), 235 with medium complexity (grades 3–4), and 131 with high complexity (grades 5–6).
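The grading rule is simple arithmetic. The tiny Python sketch below (the function name is hypothetical) makes the mapping from the two scores to the three complexity classes explicit. Because each score is at least 1, the summed grade ranges from 2 to 6.

```python
def complexity_class(guttae_score: int, blur_score: int) -> str:
    """Map the two 1-3 scores (guttae, blur) to a complexity class."""
    for s in (guttae_score, blur_score):
        if s not in (1, 2, 3):
            raise ValueError("each score must be 1 (mild), 2 (moderate) or 3 (severe)")
    grade = guttae_score + blur_score  # final grade is the sum of both scores
    if grade <= 2:
        return "low"
    if grade <= 4:
        return "medium"
    return "high"


print(complexity_class(1, 1), complexity_class(2, 2), complexity_class(3, 2))
```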

Targets and frameworks

CNN-Edge is the core of the method. If a specular image were of good quality (high contrast), with cells visible across the whole image, the inferred edge image would probably be good enough that simple thresholding and skeletonization would suffice to obtain the binary segmentation. However, the current images suffer from low contrast, blurred areas, and guttae. CNN-Body, in turn, aims to provide an ROI image that discards areas masked by extensive guttae or blurriness.
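For the ideal case described above (a clean, high-contrast edge image), the thresholding-plus-skeletonization step could look like the minimal NumPy sketch below. The text does not say which skeletonization algorithm would be used. This sketch swaps in the classic Zhang–Suen thinning, and both the threshold of 0.5 and the function names are assumptions.

```python
import numpy as np

# Offsets of the 8 neighbours P2..P9, clockwise from north (Zhang-Suen order).
_OFFSETS = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]


def _neighbours(img):
    """Return the 8 neighbour images P2..P9 of a binary image (zero-padded)."""
    h, w = img.shape
    p = np.pad(img, 1)
    return [p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w] for dy, dx in _OFFSETS]


def zhang_suen_thin(mask):
    """Thin a binary mask to 1-pixel-wide lines (Zhang-Suen thinning)."""
    img = (mask > 0).astype(np.uint8)
    changed = True
    while changed:
        changed = False
        for sub_iter in (0, 1):
            n = _neighbours(img)
            p2, _, p4, _, p6, _, p8, _ = n
            b = sum(n)  # number of foreground neighbours
            seq = n + [n[0]]  # circular sequence P2, P3, ..., P9, P2
            a = sum(((seq[i] == 0) & (seq[i + 1] == 1)).astype(np.uint8)
                    for i in range(8))  # number of 0 -> 1 transitions
            if sub_iter == 0:
                cond = (p2 * p4 * p6 == 0) & (p4 * p6 * p8 == 0)
            else:
                cond = (p2 * p4 * p8 == 0) & (p2 * p6 * p8 == 0)
            delete = (img == 1) & (b >= 2) & (b <= 6) & (a == 1) & cond
            if delete.any():
                img[delete] = 0  # remove all flagged pixels at once
                changed = True
    return img


def edges_to_segmentation(edge_img, threshold=0.5):
    """Hypothetical helper: threshold a CNN edge map, then skeletonize it."""
    return zhang_suen_thin(edge_img > threshold)
```

For example, a 5-pixel-thick bar thins to a single line of pixels. On real specular images, the blurred and guttae-covered areas are exactly where this naive approach breaks down.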

The CNNs are trained independently and share the same design; they differ only in their inputs and targets. To create the targets, we use the manual annotations (i.e., the gold standard). In these images, value 1 indicates a cell edge (edges are 8-connected lines of 1-pixel width), value 0 represents a full cell body, and value 0.5 marks any area to discard (including partial cells). If a blurred or guttae area is small enough that the cell edges can be inferred from the surroundings, the edges are annotated instead (Fig. 2f). For all annotated cells, we identify their vertices. This allows hexagonality (HEX) to be computed from all cells rather than only from the inner cells. In the latter approach, HEX is computed by counting each cell's neighbors, so cells at the periphery of the segmentation are not considered; this is how HEX was computed in previous publications23,24,25,28,40 and how Topcon's built-in software computes it. Therefore, HEX is now defined as the percentage of cells that have six vertices.
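The label convention and the revised HEX definition translate into a few lines of NumPy. This is a minimal sketch. The function names are hypothetical, and it assumes the number of vertices per cell has already been extracted; vertex detection itself is not shown.

```python
import numpy as np

EDGE, BODY, DISCARD = 1.0, 0.0, 0.5  # label values of the manual annotations


def split_annotation(gt):
    """Split a gold-standard annotation into boolean edge/body/discard masks."""
    gt = np.asarray(gt, dtype=float)
    edges = np.isclose(gt, EDGE)
    bodies = np.isclose(gt, BODY)
    discard = np.isclose(gt, DISCARD)
    if not np.all(edges | bodies | discard):
        raise ValueError("annotation contains values other than 0, 0.5 and 1")
    return edges, bodies, discard


def hexagonality(vertex_counts):
    """HEX as defined here: percentage of cells with exactly six vertices."""
    counts = np.asarray(vertex_counts)
    if counts.size == 0:
        raise ValueError("no cells to evaluate")
    return 100.0 * np.count_nonzero(counts == 6) / counts.size


print(hexagonality([6, 6, 5, 7]))  # two of four cells are hexagonal
```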

The target of the CNN-Edge contains only the cell edges from the gold standard images, convolved with a 7 × 7 isotropic unnormalized Gaussian filter with a standard deviation (SD) of 1 (Fig. 2b). This provides a continuous target with thicker edges, which has been shown to deliver better results than binary targets23.
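As an illustration of this target construction, the NumPy sketch below builds a 7 × 7 Gaussian kernel with SD 1 whose peak is 1 ("unnormalized", i.e., not scaled to sum to 1) and convolves a binary edge mask with it. The function names are hypothetical. Note that on a straight 1-pixel line the response peaks at about 2.5. The text does not say whether the target is rescaled or clipped afterwards, so this sketch leaves it unscaled.

```python
import numpy as np


def unnormalized_gaussian(size=7, sigma=1.0):
    """Isotropic Gaussian kernel with peak value 1 (not normalized to sum 1)."""
    r = np.arange(size) - size // 2
    yy, xx = np.meshgrid(r, r, indexing="ij")
    return np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))


def convolve2d_same(img, kernel):
    """'Same'-size 2D convolution with zero padding (kernel is flipped)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img.astype(float), ((ph, ph), (pw, pw)))
    flipped = kernel[::-1, ::-1]
    out = np.zeros(img.shape, dtype=float)
    for dy in range(kh):
        for dx in range(kw):
            out += flipped[dy, dx] * padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out


def edge_target(edge_mask):
    """CNN-Edge target sketch: 1-pixel edges blurred by the 7x7, SD=1 kernel."""
    return convolve2d_same(edge_mask, unnormalized_gaussian(7, 1.0))
```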

The target of the CNN-Body contains only the full cell bodies from the gold standard images. Partial cells are discarded, either because they are partially occluded by large guttae or because they lie at the border of the image (Fig. 2c). The same probabilistic transformation is applied here. Alternatively, we also evaluated whether a target that additionally includes the edges between the cell bodies (Fig. 2d) was a better approach (named CNN-Blob).

This framework is similar to our previous approach28, in which a model named CNN-ROI, whose input is the edge image, provides a binary map indicating the ROI (Fig. 2j). To create its target, the annotator simply outlined on the edge images (Fig. 2g) the rough area they considered trustworthy, creating a binary target (Fig. 2e).

Backbone of the network

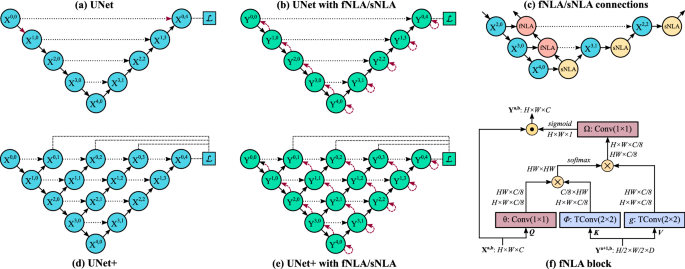

The proposed CNN has five resolution stages (Fig. 7a). We tested three designs that differ in how the convolutional layers within each node are connected: consecutively (as in UNet33), with residual connections (ResUNeXt34), or with dense connections (DenseUNet35). In addition, we explored two multiscale designs of these networks, commonly referred to as + [plus] and ++ [plusplus]36: UNet+ (Fig. 7d), UNet++, ResUNeXt+, ResUNeXt++, DenseUNet+, and DenseUNet++. Our ++ designs differ from the + designs in that the former add the features from all previous transition blocks of the same resolution stage. In total, nine basic networks were tested (the code for all cases is available in our GitHub repository).
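One way to make the difference between the plain, + and ++ topologies concrete is to list which node feeds which. The sketch below is an interpretation, not the authors' code (which is in their GitHub repository). It assumes a node grid $X^{a,b}$, where $a$ is the resolution stage (0 = full resolution) and $b$ is the column. It also assumes that a + node receives only the previous node of its stage, and that a ++ node adds the transition outputs of all previous nodes of its stage. The design labels are hypothetical.

```python
def build_grid(design, stages=5):
    """Map each node (a, b) to its inputs as (source_node, connection) pairs.

    design: "unet", "plus" or "plusplus" (hypothetical labels).
    """
    if design not in ("unet", "plus", "plusplus"):
        raise ValueError(design)
    depth = stages - 1
    grid = {(0, 0): []}
    for a in range(1, stages):  # encoder path: downsampled previous stage
        grid[(a, 0)] = [((a - 1, 0), "down")]
    for b in range(1, stages):
        # plain UNet keeps only the decoder diagonal a + b == depth
        rows = [depth - b] if design == "unet" else range(0, stages - b)
        for a in rows:
            inputs = [((a + 1, b - 1), "up")]  # upsampled lower-stage node
            if design == "unet":
                inputs.append(((a, 0), "skip"))  # long skip from the encoder
            elif design == "plus":
                inputs.append(((a, b - 1), "skip"))  # previous node only
            else:  # "plusplus": features of all previous nodes are added
                inputs += [((a, k), "skip_add") for k in range(b)]
            grid[(a, b)] = inputs
    return grid


for name in ("unet", "plus", "plusplus"):
    print(name, len(build_grid(name)), "nodes")
```

With five stages, the plain UNet has 9 nodes and both nested variants have 15. Under these assumptions, the + and ++ grids share the same nodes and differ only in how many same-stage skip connections feed each decoder node.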

A schematic representation of the (a) UNet backbone and (d) its equivalent UNet+; a simplified representation of adding fNLA/sNLA blocks to the (b) UNet and (e) UNet+, where a red arrow indicates self-attention (sNLA) if it returns to the same node or feedback attention (fNLA) otherwise; (c) a schematic overview of how the fNLA/sNLA blocks are added to the UNet backbone for the three deepest resolution scales; and (f) a detailed description of an fNLA block with multiplicative aggregation: the feature maps are shown as the shape of their tensors, $X^{a,b}$ is the tensor to be transformed, $Y^{a+1,b}$ is the tensor from the lower resolution scale used for attention, the blue boxes ($\phi$ and $g$) indicate a 2 × 2 transpose convolution with strides 2, the red boxes ($\theta$ and $\Omega$) indicate a 1 × 1 convolution, $\oplus$ denotes matrix multiplication, and $\odot$ denotes element-wise multiplication. In the case of sNLA, $\phi$ and $g$ are also a 1 × 1 convolution with $X^{a,b}$ as input. In attention terminology44, Q is for query, K for key, V for value.

Here, we briefly describe the network that performed best: the DenseUNet. In this network, the dense nodes have 4, 8, 12, 16, or 20 convolutional blocks, depending on the resolution stage, with a growth rate (GR) of 5 feature maps per convolutional layer. Each convolutional block has a compression layer, Conv(1 × 1, 4·GR maps) + BRN41 + ELU42, and a growth layer, Conv(3 × 3, GR maps) + BRN + ELU, followed by Dropout(20%) + Concatenation (with the block's input). The exception is the first dense block, which lacks the compression layer and dropout43 (all nodes in the first resolution stage lack dropout). The transition layer (short connections) has Conv(1 × 1, $\alpha$ maps) + BRN + ELU, the downsampling layer has Conv(2 × 2, strides 2, $\alpha$ maps) + BRN + ELU, and the upsampling layer has ConvTranspose(2 × 2, strides 2, $\alpha$ maps) + BRN + ELU, where $\alpha$ = (number of blocks in the previous dense block) × GR/2. The output of the last dense block, $X^{0,4}$, passes through a transition layer with 2 maps to produce the output of the network.
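Because $\alpha$ depends on the preceding dense block, the number of maps at each transition, downsampling, and upsampling layer follows directly from the block counts. The short sketch below only does that bookkeeping. The helper names are hypothetical. It also assumes that a dense block with $n$ blocks outputs its input maps plus $n$·GR maps, which follows from the concatenation described above.

```python
BLOCKS_PER_STAGE = [4, 8, 12, 16, 20]  # convolutional blocks per dense node
GR = 5  # growth rate: feature maps added per convolutional block


def transition_maps(n_blocks, growth_rate=GR):
    """alpha = (blocks in previous dense block) * GR / 2 (integer here)."""
    return n_blocks * growth_rate // 2


def dense_block_out_maps(in_maps, n_blocks, growth_rate=GR):
    """Assumed: each block concatenates GR new maps onto its input."""
    return in_maps + n_blocks * growth_rate


alphas = [transition_maps(n) for n in BLOCKS_PER_STAGE]
compression_maps = 4 * GR  # width of each 1x1 compression layer
print(alphas, compression_maps)
```

All block counts here are even, so the integer division is exact.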

The attention mechanism

The core of the attention block (Fig. 7f) is inspired by Wang et al.'s non-local attention method44, which also resembles a scaled dot-product attention block45,46. Wang et al.'s design, which is employed in the deepest stages of a classification network, proposes a self-attention mechanism in which (among other differences) Q, K, and V in Fig. 7f are the same tensor. In our case, the attention block is added at the end of each dense block. It is named feedback non-local attention (fNLA, Fig. 7f) or self-non-local attention (sNLA), depending on where it is placed within the network (Fig. 7c). If a tensor from a lower resolution stage exists, the attention mechanism uses it (fNLA). In the absence of such a tensor, a self-attention operation is performed (sNLA, where $\phi$ and $g$ in Fig. 7f become 1 × 1 convolutions with the same input $X^{a,b}$). In the DenseUNet, the encoder nodes use fNLA (except the deepest node), and the decoder nodes use sNLA (Fig. 7b); in the DenseUNet+/++, only the largest decoder uses sNLA (Fig. 7e).
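To make the data flow of Fig. 7f concrete, the NumPy sketch below implements a single attention block with multiplicative aggregation for one image in channels-last layout. It is an illustration under stated assumptions, not the authors' implementation. The softmax normalization with $1/\sqrt{d}$ scaling is borrowed from scaled dot-product attention, and the $C/8$ width of $\theta$, $\phi$, and $g$ is an assumption. BRN/ELU layers are omitted, and all weights and helper names are hypothetical.

```python
import numpy as np


def conv1x1(x, w):
    """1x1 convolution: (H, W, Cin) with weights (Cin, Cout) -> (H, W, Cout)."""
    return np.einsum("hwc,cd->hwd", x, w)


def transpose_conv2x2(y, w):
    """2x2 transposed convolution, stride 2: (H, W, Cin) -> (2H, 2W, Cout).

    w has shape (2, 2, Cin, Cout); each input pixel writes one 2x2 output patch.
    """
    h, wd, _ = y.shape
    out = np.einsum("hwc,pqcd->hpwqd", y, w)
    return out.reshape(2 * h, 2 * wd, w.shape[-1])


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def init_params(c, c_low, rng, scale=0.1):
    """Random illustrative weights; d = C // 8 attention channels (assumed)."""
    d = max(c // 8, 1)
    return {
        "theta": scale * rng.standard_normal((c, d)),
        "phi_1x1": scale * rng.standard_normal((c, d)),
        "g_1x1": scale * rng.standard_normal((c, d)),
        "phi_t": scale * rng.standard_normal((2, 2, c_low, d)),
        "g_t": scale * rng.standard_normal((2, 2, c_low, d)),
        "omega": scale * rng.standard_normal((d, 1)),
    }


def nla_block(x, params, y=None):
    """fNLA if a lower-resolution tensor y is given, otherwise sNLA."""
    h, w, _ = x.shape
    q = conv1x1(x, params["theta"])  # queries from X (theta)
    if y is None:  # sNLA: keys and values from X itself
        k = conv1x1(x, params["phi_1x1"])
        v = conv1x1(x, params["g_1x1"])
    else:  # fNLA: keys and values from Y, upsampled to X's size
        k = transpose_conv2x2(y, params["phi_t"])
        v = transpose_conv2x2(y, params["g_t"])
    d = q.shape[-1]
    q, k, v = (t.reshape(h * w, d) for t in (q, k, v))
    attn = softmax(q @ k.T / np.sqrt(d))  # assumption: scaled softmax, (HW, HW)
    z = (attn @ v).reshape(h, w, d)  # attended features
    gate = 1.0 / (1.0 + np.exp(-conv1x1(z, params["omega"])))  # (H, W, 1) sigmoid map
    return x * gate  # multiplicative aggregation, broadcast over all C maps


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 16))  # X^{a,b}
y = rng.standard_normal((4, 4, 32))  # Y^{a+1,b}, half the spatial size
params = init_params(16, 32, rng)
print(nla_block(x, params, y).shape, nla_block(x, params).shape)
```

Because the sigmoid gate lies in (0, 1), multiplicative aggregation can only attenuate the input features, never amplify them.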

We also explored three types of attention mechanism, depending on the type of aggregation at the end of the block: (a) the default case (Fig. 7f) uses multiplicative aggregation, where a single attention map (from $\Omega$) is sigmoid-activated and then element-wise multiplied with all input feature maps; (b) in concatenative aggregation, $\Omega$ is simply an ELU activation, whose output maps (C/8 maps) are concatenated to the input features; (c) in additive aggregation, $\Omega$ becomes a 1 × 1 convolution with ReLU activation and C output feature maps, which are then summed with the input tensor. For comparison, Wang et al.'s model44 is an sNLA with additive aggregation.
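The three aggregation variants differ only in what $\Omega$ does with the attended features. The NumPy sketch below compares their output shapes. It assumes the attended features $Z$ (the tensor entering $\Omega$) have $C/8$ channels, and the function and argument names are hypothetical.

```python
import numpy as np


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def elu(t):
    # ELU with alpha = 1; clamp before expm1 to avoid overflow on large t
    return np.where(t > 0, t, np.expm1(np.minimum(t, 0.0)))


def aggregate(x, z, mode, w_omega=None):
    """Combine input X (H, W, C) with attended features Z (H, W, C/8)."""
    if mode == "multiplicative":  # Omega: 1x1 conv to 1 map, sigmoid, multiply
        gate = sigmoid(np.einsum("hwk,kd->hwd", z, w_omega))  # w_omega: (C/8, 1)
        return x * gate
    if mode == "concatenative":  # Omega: ELU only; C/8 maps appended
        return np.concatenate([x, elu(z)], axis=-1)
    if mode == "additive":  # Omega: 1x1 conv to C maps + ReLU, then summed
        return x + np.maximum(np.einsum("hwk,kd->hwd", z, w_omega), 0.0)  # w_omega: (C/8, C)
    raise ValueError(f"unknown aggregation: {mode}")


rng = np.random.default_rng(1)
x = rng.standard_normal((8, 8, 16))
z = rng.standard_normal((8, 8, 2))
for mode, w in [("multiplicative", rng.standard_normal((2, 1))),
                ("concatenative", None),
                ("additive", rng.standard_normal((2, 16)))]:
    print(mode, aggregate(x, z, mode, w).shape)
```

Only the concatenative variant changes the channel count (C + C/8), which the following layer must accommodate.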

Intuitively, an sNLA mechanism computes the response at a specific position in the feature maps as a weighted sum of the features at all positions. In fNLA, the attention block relates the input tensor to the output features of the lower dense block, allowing the attention mechanism to use information computed further ahead in the network. Moreover, the feedback path created in the encoder allows the attention features to propagate from the deepest dense block back to the first block. Although endothelial specular images do not exhibit long-range dependencies (features separated by a distance of 3–4 cells do not seem to be correlated), this attention operation might be useful in the presence of large blurred areas or guttae. In this work, we explored this attention mechanism for the DenseUNet and its multiscale versions DenseUNet+/++.
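The weighted-sum intuition can be written with Wang et al.'s generic non-local operation44, here written as $z_i = \frac{1}{\mathcal{C}(x)}\sum_{\forall j} f(x_i, x_j)\,g(x_j)$, where $i$ and $j$ index positions and $\mathcal{C}(x)$ is a normalization factor. Suppose the pairwise function $f$ is a softmax (embedded Gaussian) with $1/\sqrt{d}$ scaling, an assumption that this section does not specify. Under that assumption, the fNLA response at position $i$ of $X^{a,b}$ with multiplicative aggregation can be sketched as follows. Here $y_j$ is a position of the upsampled $Y^{a+1,b}$, $d$ is the number of channels produced by $\theta$ and $\phi$, and $\sigma$ is the sigmoid. For sNLA, $y$ is replaced by $x$, and $\phi$ and $g$ become 1 × 1 convolutions.

$$
z_i = \sum_{j} \frac{\exp\!\left(\theta(x_i)^{\top}\phi(y_j)/\sqrt{d}\right)}{\sum_{k}\exp\!\left(\theta(x_i)^{\top}\phi(y_k)/\sqrt{d}\right)}\, g(y_j),
\qquad
\mathrm{out}_i = x_i \odot \sigma\!\left(\Omega(z_i)\right)
$$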

Description of the postprocessing

The postprocessing aims to fix any edge discontinuities. Here, we improve a process first described in a previous publication23. The steps are:

(I) We estimate the average cell size ($l$) using Fourier analysis of the edge image23. As we showed in previous work25, this estimation is simple, extremely robust, and accurate.
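The exact Fourier procedure is described in the cited work. The NumPy sketch below only illustrates the general idea with a generic radial power-spectrum estimate. The dominant ring in the 2D power spectrum of a roughly periodic cell mosaic, at radius $r$ (in frequency bins), corresponds to a spatial period of about $N/r$ pixels for an $N \times N$ image. The function name, the `r_min` cut-off for low frequencies, and the square-image restriction are assumptions. The returned value is a characteristic spacing that relates to, but may not be identical to, the paper's $l$.

```python
import numpy as np


def estimate_cell_spacing(edge_img, r_min=2):
    """Rough characteristic cell spacing (pixels) from the radial power spectrum."""
    img = np.asarray(edge_img, dtype=float)
    n = img.shape[0]
    if img.shape != (n, n):
        raise ValueError("this sketch assumes a square image")
    power = np.abs(np.fft.fft2(img - img.mean())) ** 2  # remove DC, then power
    k = np.fft.fftfreq(n) * n  # integer frequency indices
    kyy, kxx = np.meshgrid(k, k, indexing="ij")
    radius = np.rint(np.hypot(kyy, kxx)).astype(int)
    totals = np.bincount(radius.ravel(), weights=power.ravel())
    counts = np.bincount(radius.ravel())
    profile = totals / np.maximum(counts, 1)  # mean power per radius
    r_max = n // 2
    r_peak = r_min + int(np.argmax(profile[r_min:r_max + 1]))
    return n / r_peak


# Synthetic check: a pattern with a 16-pixel period in both directions.
x = np.arange(128)
yy, xx = np.meshgrid(x, x, indexing="ij")
pattern = np.cos(2 * np.pi * xx / 16) + np.cos(2 * np.pi * yy / 16)
print(estimate_cell_spacing(pattern))
```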

(II) As a new step, we add a perimeter with intensity 0.5 to the edge image. This closes any partial…

DenseUNets with feedback non-local attention for the segmentation of specular microscopy images of the corneal

2022-08-18 09:40:53